Across the country, we see evidence that students are learning at slower rates than in years past, particularly in mathematics. For example, Curriculum Associates’ researchers found an additional 6% of students were not ready to access on-grade instruction in mathematics in Fall 2020 compared to historical trends. My former colleagues at NWEA found student achievement in mathematics in Fall 2020 was about 5 to 10 percentile points lower than in Fall 2019. In South Carolina, where 62% of students were eligible for the National School Lunch Program in 2019, the projected percentage of students whose learning is on track for proficiency by the end of this year is notably lower than in years past. Most worrisome, we know we are missing students. To paraphrase Robert Fulghum, who wrote “All I Really Need to Know I Learned in Kindergarten,” to optimize learning recovery we need to hold hands, stick together, and work to accelerate learning.

Supporting learning recovery requires that we (1) optimize learning tasks to the student’s stage of development to target learning experiences just where the student needs support, (2) facilitate student growth to the next stage of sophistication by fostering and rewarding self-regulation, and (3) treat all assessments formatively.

To accomplish these three goals, we must rethink assessment development and use. To be effective tools for accelerating student learning, assessment development and use must be synergized with findings and processes from the multidisciplinary learning sciences field. This is important if we want both classroom and large-scale assessments to serve teaching and learning, not just accountability. Why? Because together we must center our focus on understanding and cultivating the cognition students need to show for more advanced knowledge and skills in the content area that represents College and Career Readiness.

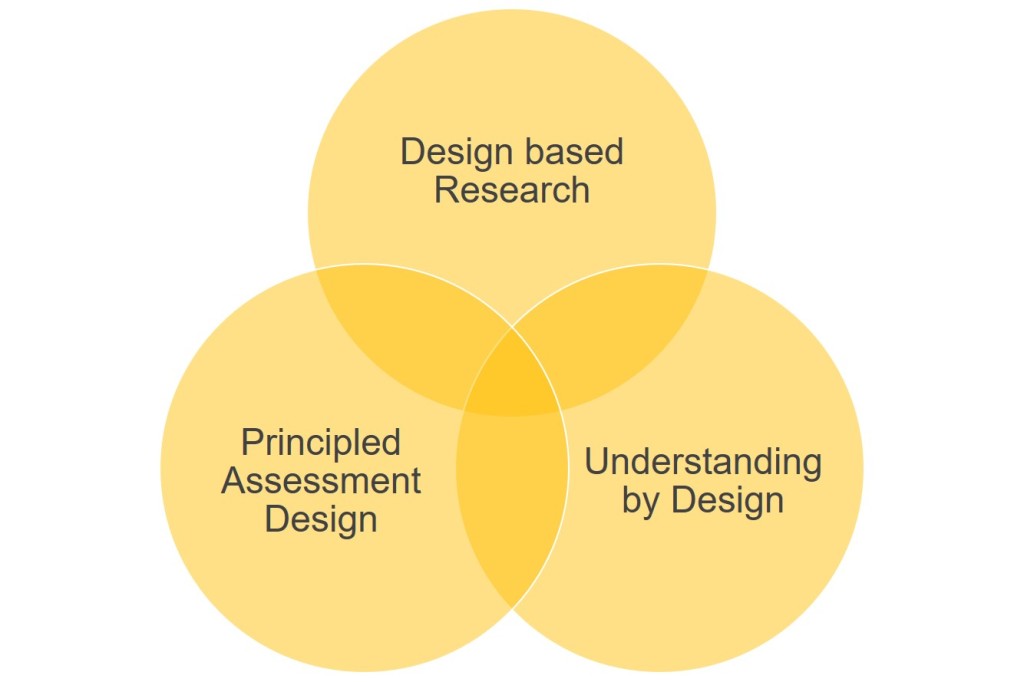

In this three-part blog series, I argue our development and use of assessments across the educational ecosystem needs to synergize practices with the learning sciences. I am going to introduce the learning sciences, talk about design-based research, and show connections to assessments developed to understand how students grow in cognition in the content areas from the learning sciences field. In my next blog, I will show examples of synergizing classroom and large-scale assessments using principled assessment design, which is similar to the learning sciences design-based research approach. Finally, I will connect this work to curriculum design, most especially, to Understanding by Design.

Learning Sciences

The field of learning sciences is multidisciplinary. It often focuses on understanding and exploring how learning occurs in real-world contexts, which are increasingly examined through technology. Sommerhoff et al. (2018) defined the learning sciences in this way:

[The] learning sciences target the analysis and facilitation of real-world learning in formal and informal contexts. Learning activities and processes are considered crucial and are typically captured through the analysis of cognition, metacognition, and dialog. To facilitate learning activities, the design of learning environments is seen as fundamental. Technology is key for supporting and scaffolding individuals and groups to engage in productive learning activities (p. 346).

Learning scientists often distinguish between recall of facts and deeper conceptual knowledge. They also focus on the contexts and situations in which students learn and show their thinking as they grow in expertise. Cognition theories are important. Another focal point is situating learning in personally relevant contexts (that include the student’s culture) with sufficiently complex, authentic, and interesting tasks that facilitate learning. The learning sciences field is large and encompasses researchers from areas such as cognitive psychology, computer science, and content areas such as science, mathematics, and reading, among others. Ironically, psychometrics, the field in which I work, is not often included or discussed.

As practitioners and theorists, O’Leary et al. (2017) noted that psychometricians and test developers are focused on the technical evidence in support of the score interpretations and test score uses that they develop. This does not mean, however, that the test developer interpretations are useful to teachers, or that test developers always validate the actual score interpretations. In advance of creating tests, we seldom collect evidence that teachers find the proposed test score interpretations instructionally useful, or that the interpretations describe student behaviors in ways that are helpful to instruction. There is, however, a growing group of psychometricians who, like me, recognize that the ways we develop assessments need to evolve to provide better information for teachers and parents. At points in the assessment development cycle, these evolving practices are similar in strategy to the design-based research practices discussed in the learning sciences literature. The synergy between instructionally useful assessments and the learning sciences begins with the notion that assessments have the goal of showing validity and efficacy evidence that they are designed to, and actually do, help teachers understand and predict how learning occurs to support instructional actions.

Design-based researchers often do two things at the same time. They put forth a theory of learning and collect evidence to determine if a theory can be supported or uncovered through iteration. The goal of such research is to develop evidence-based claims that describe and support how people learn. To investigate such theories, learning scientists carefully engineer the context and evidence collection in ways that support an intended positive change in learning. Sommerhoff et al. (2018) show in their network analysis of learning sciences that what we want to understand are areas of student cognition, learning, and motivation (among others); these are the outcomes of import. These areas are what we want to make inferences about as we observe and teach students. Learning scientists use design-based research, assessment, and statistics (and other techniques) as methods of investigating these outcomes.

The merger of what we want to understand to support students and how we use assessment and design-based research to collect evidence for such inferences is exemplified by Scott et al. (2019). They describe the following design process.

- Researchers use qualitative methods and observations to identify the various ways students reason about the topic of interest as they develop mastery, including vague, incomplete, or incorrect conceptions.

- The findings are ordered by increasing levels of sophistication that represent cognitive shifts in learning that begin with the entry level conceptualization (lower anchor) and culminate with the desired target state of reasoning (the upper anchor).

- Middle levels describe the other likely paths students may follow.

- When possible, the reasoning patterns described in the intervening levels draw from research in the cognitive and learning sciences on how students construct knowledge.

- Assessment instruments are the tools that researchers use to collect student ideas to construct and support the learning framework.

- The tasks students are asked to engage in on the assessment elicit the targeted levels of sophistication that represent the concepts of the hypothesized learning progression.

- Evidence is found to support, disconfirm, or revise the progression.
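The last three steps can be made concrete with a small sketch. Scott et al. (2019) do not present their process as code; this is only an illustration under loud assumptions. The `Task` class, the function names, the level numbers, and the 0/1 response data are all hypothetical and invented for this example. The sketch also assumes one very simple kind of evidence: if the progression holds, tasks aimed at higher levels should be answered correctly by fewer students. Real validation work draws on much richer evidence than this.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Task:
    name: str
    level: int  # hypothesized progression level the task targets (1 = lower anchor)


def proportion_correct_by_level(tasks, responses):
    """Average proportion of students answering correctly, per hypothesized level."""
    by_level = {}
    for task in tasks:
        scores = responses[task.name]  # list of 0/1 scores, one per student
        by_level.setdefault(task.level, []).append(mean(scores))
    return {level: mean(values) for level, values in sorted(by_level.items())}


def ordering_is_supported(level_difficulty):
    """True when each higher level has a strictly lower proportion correct."""
    values = [level_difficulty[level] for level in sorted(level_difficulty)]
    return all(lower > higher for lower, higher in zip(values, values[1:]))


# Invented toy data: four tasks across three hypothesized levels, four students.
tasks = [Task("t1", 1), Task("t2", 2), Task("t3", 2), Task("t4", 3)]
responses = {
    "t1": [1, 1, 1, 0],
    "t2": [1, 1, 0, 0],
    "t3": [1, 0, 1, 0],
    "t4": [1, 0, 0, 0],
}

summary = proportion_correct_by_level(tasks, responses)
print(summary)
print(ordering_is_supported(summary))
```

With this toy data the check passes, which would count as one small piece of support for the hypothesized ordering. If a level turned out easier than the one below it, that would be a prompt to revisit the tasks or revise the progression, not a verdict on its own.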

Shepard wrote that assessment developers and psychometricians need to know the theory of action underlying learning, and she noted, “a certain amount of validation research is built into the recursive process of developing learning progressions.” Design-based research has some overlap with a newer design-based methodology for creating large-scale educational assessments called principled assessment design (PAD). This approach can also be used for classroom assessments. Examples of a PAD approach will be the focus of my next blog. In the meantime, here is a graphic foreshadowing where we are going: unifying our educational ecosystem so that, together, we efficiently accelerate and recover learning opportunities for students. We all can contribute to creating systems that support better understanding of where students are in their learning and what they likely need next. Let’s hold hands, stick together, and do this!
