A key aspect of formative assessment is that teachers collect and interpret samples of student work or analyze items to diagnose where students are in their learning. Busy teachers face two choices. They can count correct responses by topic and move on, or they can stop, grab a cup of steaming hot coffee, and spend time analyzing the features of items and student work that are associated with different stages of student cognition in the content area. This latter approach takes a lot of time (and a lot of coffee).

If a state’s theory of how students grow to proficiency is not available or widely publicized, it is difficult for teachers to align assessment tasks so that they reveal where a student is located on a continuum of proficiency and beyond. The teacher cannot reliably determine where a student currently is along that continuum. As a result, instructional adaptations and judgments about whether a student has mastered the standards become less certain.

How likely is it that two teachers in different areas of a state who use the same curriculum materials and standards would create classroom assessments that investigate a continuum of proficiency in the same way? Even when teachers carefully use state standards and the same curriculum to guide teaching, instruction, and assessment, differences in mastery judgments can and do occur. Standards and curricula are necessary and essential foundations for student learning, but on their own they are insufficient to ensure the same learning opportunities. Learning opportunities are often determined on the ground by the teachers who know students best, frequently based on their classroom assessment results. However, my 19 years of working with teachers, curriculum specialists, and professional item writers have taught me that different stakeholders have different interpretations of what the journey to proficiency looks like. These different perspectives are likely to drive different instructional decisions. If we want to accelerate student learning, we need a common, evidence-based framework, used in all parts of the state’s educational ecosystem, to help us identify where students are in their learning throughout the year.

In my first blog of this series, I argued that our development and use of assessments across the educational ecosystem needs to synergize practices with the learning sciences. In this blog, I discuss specifically how we can create instructionally sensitive and useful assessments. The design framework I describe requires evidence, both in the design of the assessments and in the analysis of item-level results against that design, to support the claim that the learning theory being described is reasonably true. This branch of evidence-centered design is called principled assessment design using Range Achievement Level Descriptors (Range ALDs, or RALDs).

Principled Assessment Design

Principled assessment design using RALDs has been proposed as an assessment development framework in which a state’s stakeholders first define the state’s theory of how student knowledge and skills grow within a year. Learning science evidence is used where it exists, alongside the judgments of teachers, researchers, and item writers. During this process, stakeholders define the contexts, situations, and item types that best foster student learning and allow students to show their thinking as they grow in expertise. They also develop companion documents that provide guidelines for how to assess. When items are field tested, analyses beyond those that are standard in the measurement industry are conducted to check the alignment of items to the learning theory. The state also collects feedback from a diverse group of teachers on the utility of the score interpretations and companion documents for classroom use. All of this happens before the assessment ecosystem is used operationally. The vision for this framework is to align interpretations of growth in learning across the assessment ecosystem, using initial evidence from large-scale assessments and teacher feedback to support or revise materials as needed so that the system is fully aligned for all students.

Range ALDs

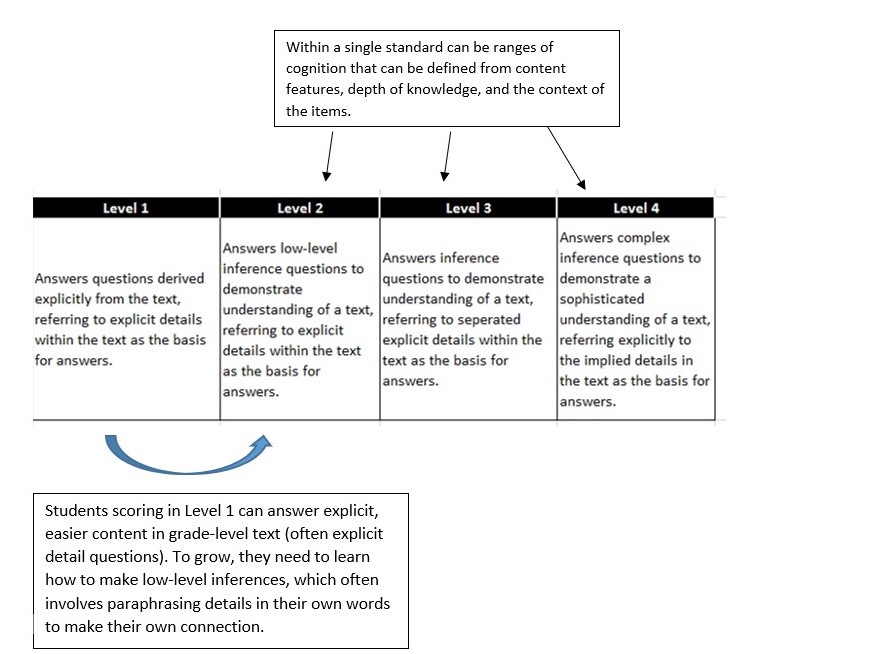

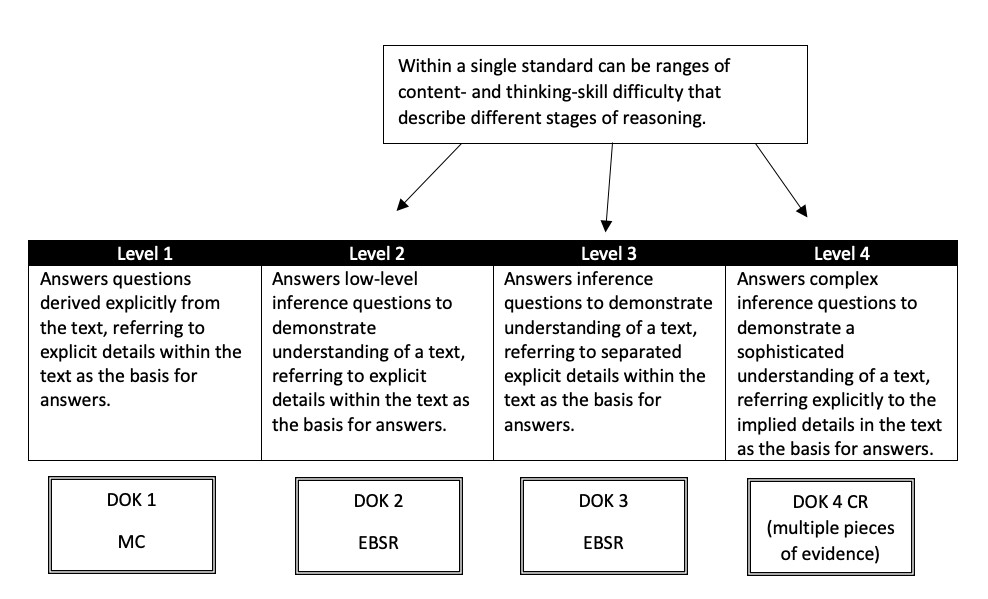

RALDs describe ranges of content and thinking-skill difficulty. They represent theories of how students grow as they integrate standards to solve increasingly sophisticated problems or tasks in the content area. They are essentially cognition-based learning progressions. Why cognition-based? States prescribe neither the curriculum nor the order in which content is sequenced and taught. RALDs are intended to frame how students grow in their problem-solving cognition within the content area in a way that is agnostic to decisions under local educational control. They are critical for communicating how a task can align to a standard and elicit evidence of a student’s stage of learning even when the thinking the student shows does not yet represent proficiency. In that case, the student’s cognition still needs to grow, as shown in Figure 1.

Range ALDs have the potential to reduce the time teachers spend analyzing the features of tasks that require students only to click a response, which have become pervasive in our instructional and assessment task ecosystem. Teachers can quickly match the features of a task to the cognitive progression of skills to identify where in the continuum of cognition the item is intended to show student thinking. Teachers can then identify where students are in their thinking and analysis skills by matching the items a student answers correctly to the corresponding cognition stage. Even better, districts or teachers creating common assessments could pre-match items to the continuum to save valuable time. Such an approach allows teachers, districts, and item writers to use the same stages of learning when creating measurement opportunities centered on how thinking in the content area becomes more complex. This supports personalized opportunities to measure students at different stages of learning within the same class. For example, students at Stage 1 in text analysis need more opportunities to make inferences rather than only retrieve details from the text.
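To make the matching step concrete, here is a minimal Python sketch, assuming a common assessment whose items have already been pre-matched to RALD stages. The item IDs, the three-stage structure, and the 0.67 proportion-correct cut-off are hypothetical illustrations, not part of any state's RALD materials. The function reports the highest stage a student has demonstrated before the first stage where the evidence falls short.

```python
from collections import defaultdict

# Hypothetical common assessment: each item ID is pre-matched to a RALD stage (1 = earliest).
ITEM_STAGE = {
    "item01": 1, "item02": 1, "item03": 1,
    "item04": 2, "item05": 2, "item06": 2,
    "item07": 3, "item08": 3, "item09": 3,
}


def estimate_stage(responses, item_stage=ITEM_STAGE, threshold=0.67):
    """Return the highest stage demonstrated before the first stage the student
    has not yet shown (0 if Stage 1 is not yet shown).

    responses maps item ID -> True (correct) or False (incorrect).
    The 0.67 proportion-correct threshold is an illustrative assumption.
    """
    correct = defaultdict(int)
    attempted = defaultdict(int)
    for item, is_correct in responses.items():
        stage = item_stage[item]
        attempted[stage] += 1
        correct[stage] += int(is_correct)

    reached = 0
    for stage in sorted(set(item_stage.values())):
        # Stop at a stage with no evidence or too few correct responses.
        if attempted[stage] == 0 or correct[stage] / attempted[stage] < threshold:
            break
        reached = stage
    return reached
```

For a student who answers all three Stage 1 items correctly but only one of the three Stage 2 items, `estimate_stage` returns 1, suggesting the student needs more opportunities at the Stage 2 level. A handful of items is a coarse signal, so a teacher would still weigh this against other classroom evidence.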

The notion of adapting measurement and learning opportunities to the needs of a student is a principle of learning science. RALDs are intended to help teachers estimate the complexity of the tasks they offer students and compare it with the range of complexity students will see at the end of the year on a summative assessment. Interestingly, such an approach aligns with the Office of Educational Technology’s definition of learning technology effectiveness: “Students who are self-regulating take on tasks at appropriate levels of challenges, practice to proficiency, develop deep understandings, and use their study time wisely” [23, p. 7]. Beyond targeting tasks to students, the teacher can also empower students to practice making inferences as they read texts independently and together in the classroom. Curriculum and instruction set the learning opportunities; the tasks are used to locate a child on their personal journey to proficiency.

How and when does a state collect validity evidence to support such an approach?

Scott et al. wrote that assessments support the collection of evidence to help construct and validate cognition-based learning progressions. Under the model I describe, the state is responsible for collecting evidence to support the claims that the progressions are reasonably true. The most efficient place to do this is through the large-scale assessments it designs, buys, and/or administers. This approach requires an evidence-based argument that the RALDs serve as the test score interpretations and that the items aligned or written to the progressions increase in difficulty along the test scale as the progressions predict. Essentially, to improve our system, we are using the assessment design process to:

- define the criteria for success for creating progressions at the beginning of the development process,

- use learning science evidence where available, and

- collect procedural and measurement evidence to empirically support or refine the progressions.

The first source of validity evidence is documentation of the learning science evidence used to create the progressions, recorded in the companion document for the assessments that teachers, districts, and item developers use. Such sources often describe not only what makes features of problem solving difficult but also conceptual supports that help students learn and grow. This documentation is important for supporting content validity claims about an assessment that does more than measure state standards. This is an assessment system designed to support teaching AND learning.

Item difficulty modeling (IDM) is a second way to collect empirical evidence. When conducting IDM studies, researchers identify task features that are expected to predict item difficulty along a test scale; ideally, these features are built into items transparently during item development, as shown in Figure 2. It is critically important to specify item types for progression stages because research suggests that item types matter not only for modeling item difficulty but also for supporting student learning.
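At its simplest, an IDM study regresses estimated item difficulty on the coded task features. The minimal Python sketch below fits an ordinary least-squares model with an intercept using only the standard library. The three feature names and all item values are hypothetical, and the difficulties were constructed to follow an exact linear pattern so the fit is easy to check; real field-test data would include noise, and a real IDM study would also report how much of the variance in difficulty the features explain.

```python
# Hypothetical coded task features for each item, in this order:
# (requires_inference 0/1, text_complexity 1-3, constructed_response 0/1)
FEATURE_NAMES = ("requires_inference", "text_complexity", "constructed_response")

# Hypothetical field-test difficulties (e.g., in logits); illustrative values only.
ITEMS = [
    ((0, 1, 0), -0.5),
    ((0, 2, 0), 0.0),
    ((1, 1, 0), 0.3),
    ((1, 2, 0), 0.8),
    ((1, 2, 1), 1.4),
    ((1, 3, 1), 1.9),
    ((0, 3, 1), 1.1),
]


def fit_ols(X, y):
    """Least-squares coefficients for y ~ X * beta via the normal equations."""
    n = len(X[0])
    # Build the augmented system [X^T X | X^T y].
    aug = [
        [sum(row[i] * row[j] for row in X) for j in range(n)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_val = aug[col][col]
        aug[col] = [v / pivot_val for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][-1] for i in range(n)]


def item_difficulty_model(items, feature_names=FEATURE_NAMES):
    """Regress item difficulty on an intercept plus the coded task features."""
    X = [[1.0] + [float(v) for v in features] for features, _ in items]
    y = [float(b) for _, b in items]
    return dict(zip(("intercept",) + tuple(feature_names), fit_ols(X, y)))
```

With these constructed values, `item_difficulty_model(ITEMS)` recovers weights of about +0.8 for inference, +0.5 per level of text complexity, and +0.6 for a constructed response. Positive weights on features that the progression places in later stages are the kind of evidence that would support it.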

A third approach to validating progressions is to identify items along the test scale that do and do not match the learning theory, and then to decide whether to remove or edit the non-conforming items or to edit the progressions they contradict. The process I am describing is iterative. It can be carried out during the development and deployment of a large-scale assessment simply by rearranging many traditional assessment development processes into an order that follows the scientific process. I believe this process is less difficult than we think. We simply need to get in a room together with our cups of coffee and outline the process and evidence collection plan before beginning the adventure!

The conscious choices to unite our assessment ecosystem around a common learning theory framework, with transparent specifications and learning science evidence, are what make assessment development principled (page 62). That is, task features, such as constructed-response items, are strategically included by design in certain regions of the progression. Being transparent about such decisions and sharing the learning science evidence on which they are based allows teachers to use assessment opportunities in the classroom to support transfer of learning. Transfer of learning to new contexts and scenarios within a student’s culture is critical for supporting student growth over time. This, in turn, ensures that teachers and students are using the same compass and are framing their interpretations of what proficiency represents in similar ways, which promotes equal opportunities to learn. It also allows large-scale assessments to contribute to teaching and learning rather than being relegated solely to program evaluation. It is incumbent upon all of us to ensure that students get equal access to the degree of challenge the state desires for its students.
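Identifying conforming and non-conforming items can also be sketched in a few lines of Python. This minimal sketch assumes each field-tested item has a hypothetical assigned stage and an estimated difficulty on the test scale. It first checks whether median difficulty rises from stage to stage as the progression predicts, then places cut points halfway between adjacent stage medians and flags items whose difficulty lands in a different stage's region. The midpoint rule and the sample values are illustrative assumptions, not an established standard.

```python
from statistics import median

# Hypothetical field-test results: (item_id, assigned RALD stage, estimated difficulty).
SAMPLE_ITEMS = [
    ("A1", 1, -1.2), ("A2", 1, -0.9), ("A3", 1, 0.9),
    ("B1", 2, 0.0), ("B2", 2, 0.2), ("B3", 2, -0.1),
    ("C1", 3, 1.1), ("C2", 3, 1.4), ("C3", 3, 1.0),
]


def flag_nonconforming(items):
    """Check stage ordering and flag items whose difficulty fits another stage.

    Returns (stages_ordered, flagged), where flagged holds
    (item_id, assigned_stage, empirical_stage) tuples.
    """
    stages = sorted({stage for _, stage, _ in items})
    centers = {s: median(b for _, stage, b in items if stage == s) for s in stages}

    # Does median difficulty rise from one stage to the next, as the progression predicts?
    stages_ordered = all(centers[a] < centers[b] for a, b in zip(stages, stages[1:]))

    # Illustrative rule: cut points halfway between adjacent stage medians.
    cuts = [(centers[a] + centers[b]) / 2 for a, b in zip(stages, stages[1:])]

    flagged = []
    for item_id, stage, difficulty in items:
        # The number of cut points an item's difficulty exceeds gives its empirical stage.
        empirical = stages[sum(difficulty > c for c in cuts)]
        if empirical != stage:
            flagged.append((item_id, stage, empirical))
    return stages_ordered, flagged
```

With the sample values, `flag_nonconforming(SAMPLE_ITEMS)` finds that the stage medians increase as predicted and flags item `A3`, which was written to Stage 1 but behaves like a Stage 3 item. Content experts would then decide whether to edit the item or the Stage 1 description. If the stage medians themselves were out of order, that would point to the progression rather than to individual items.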

The synergy between assessments and the learning sciences begins with the notion that assessments can be designed to support teaching and learning. Our goal must be to show validity and efficacy evidence that such assessments are designed to support teachers and actually do so. To produce such assessments, we use principled approaches to assessment design and work to improve the score interpretations. We collect increasingly common sources of evidence to evaluate whether more nuanced score interpretations can be supported. We provide professional development for pre-service and in-service teachers on using the materials, and, critically, we collect information on whether RALDs, when used accurately, help teachers analyze student thinking in the classroom. In the final blog of this series, I am going to explore embedding this framework into the Understanding by Design curriculum process. I have my coffee pot and creamer ready to go!
